I am a Ph.D. candidate in the Causal Artificial Intelligence Lab at Columbia University, working with Prof. Elias Bareinboim. I also work closely with Prof. Junzhe Zhang. Previously, during my Master’s studies at Brown University, I was fortunate enough to work with Prof. Michael Littman.

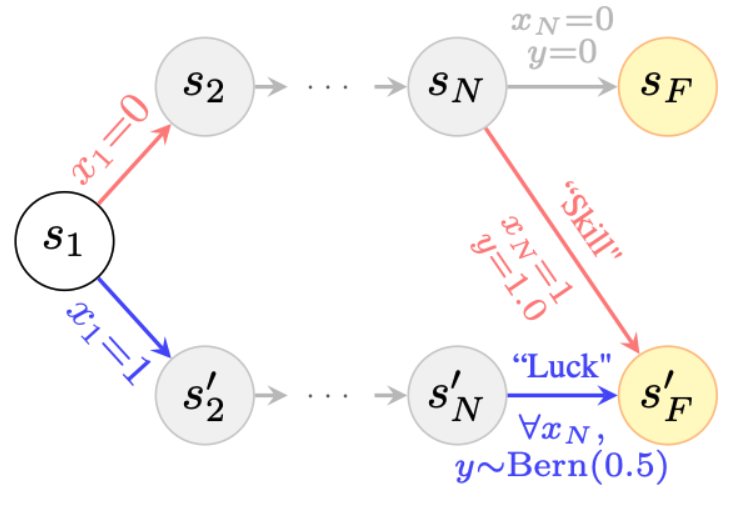

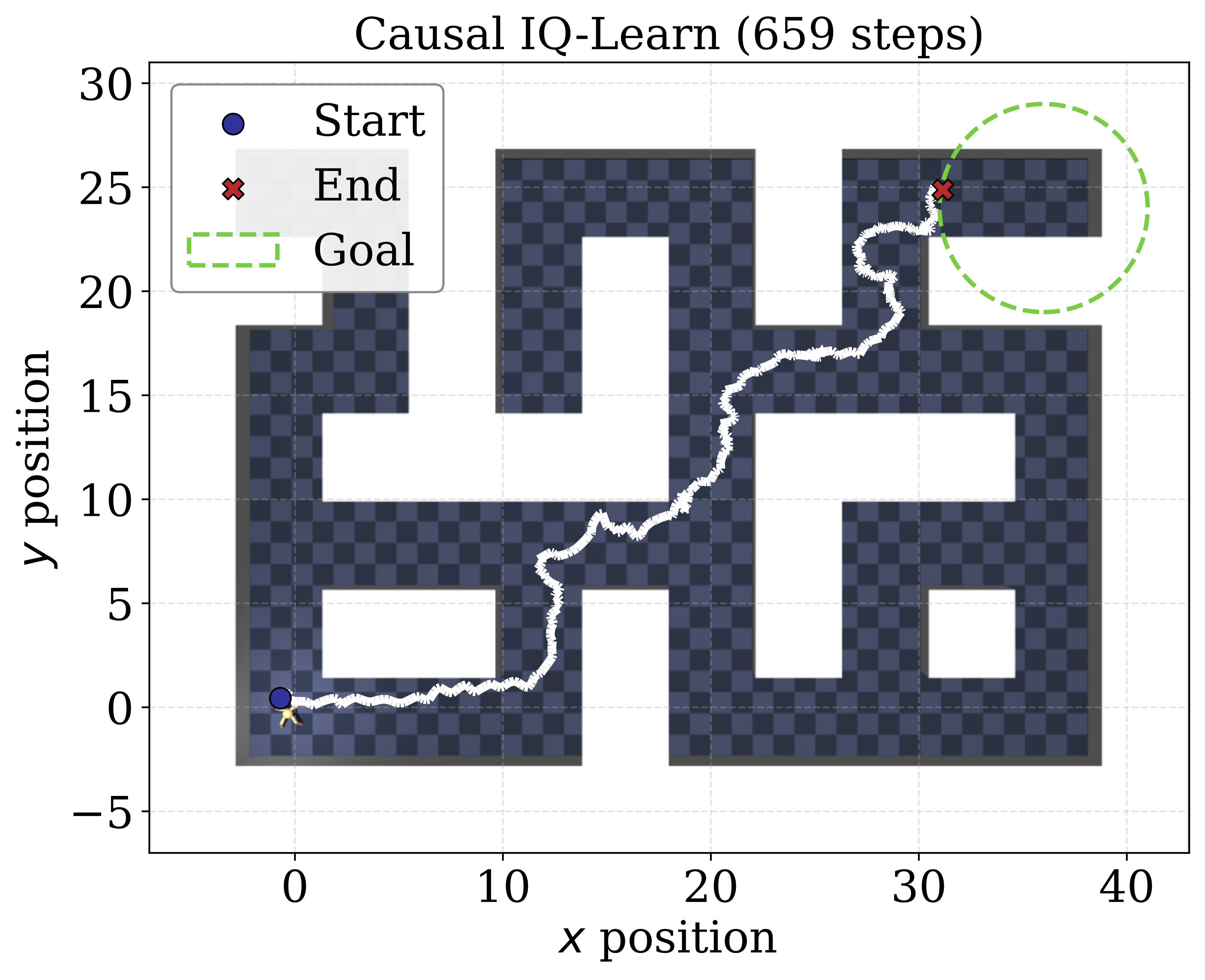

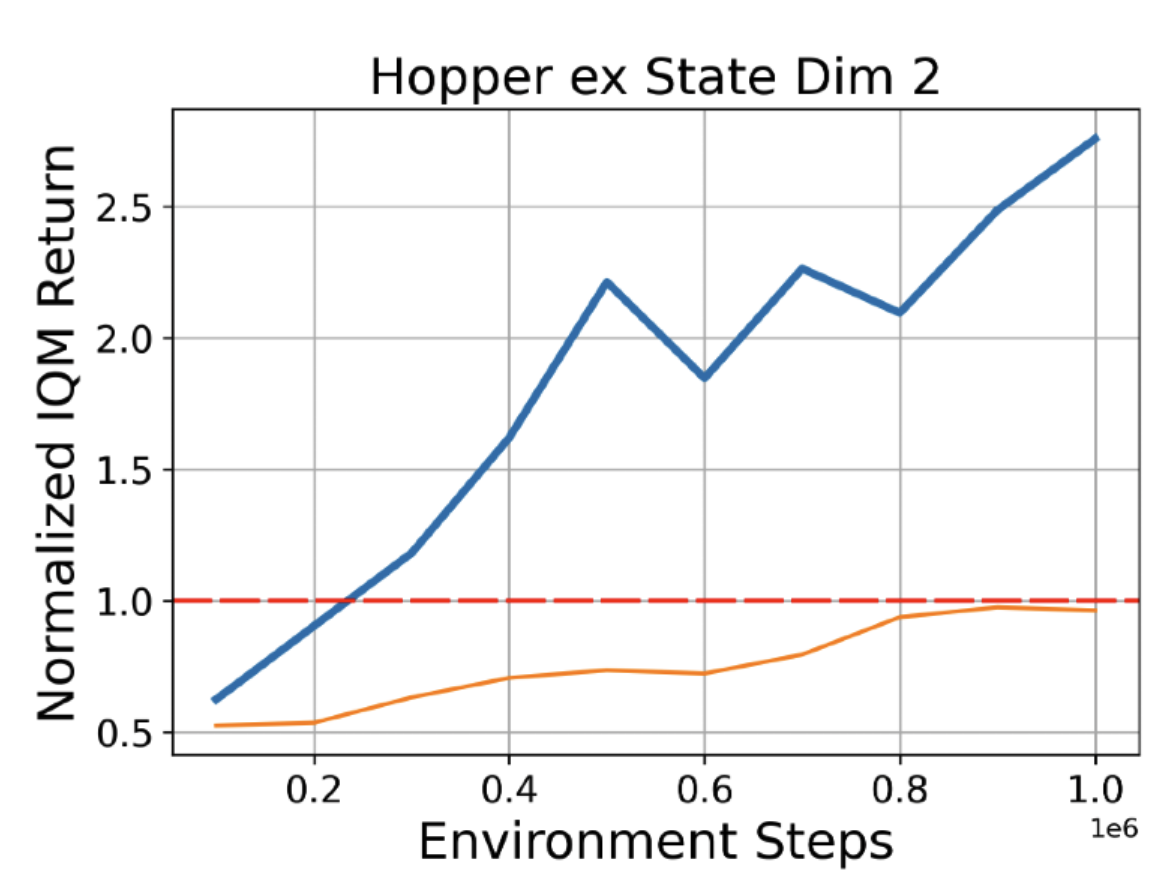

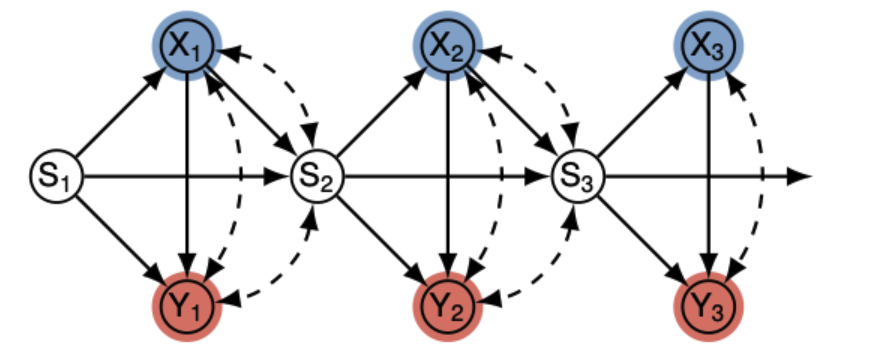

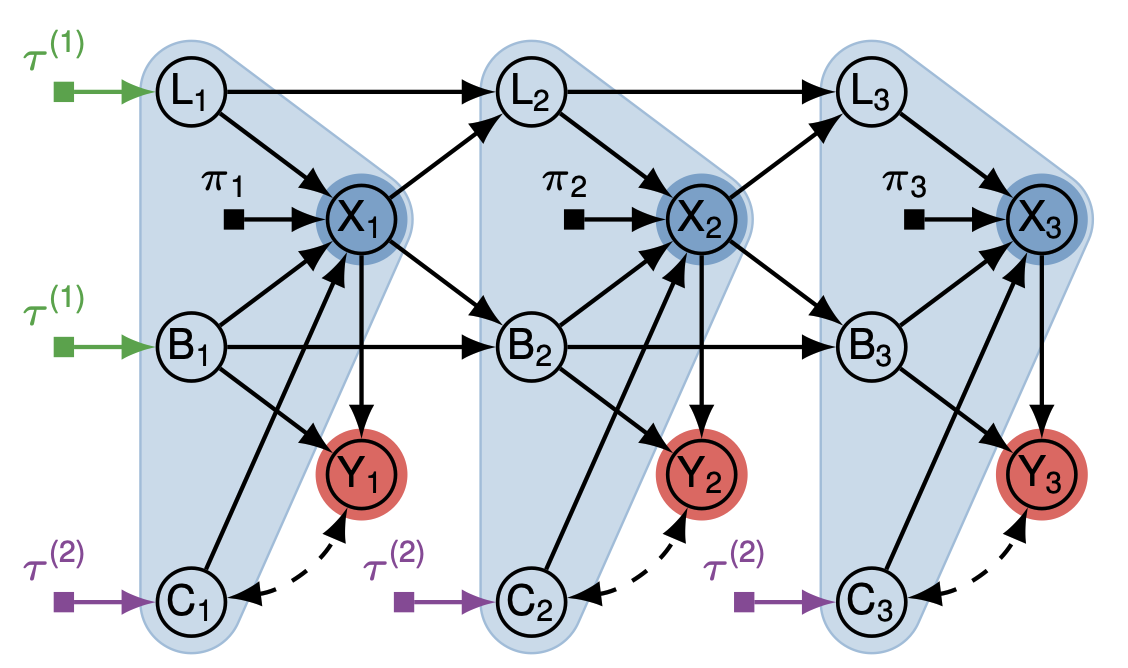

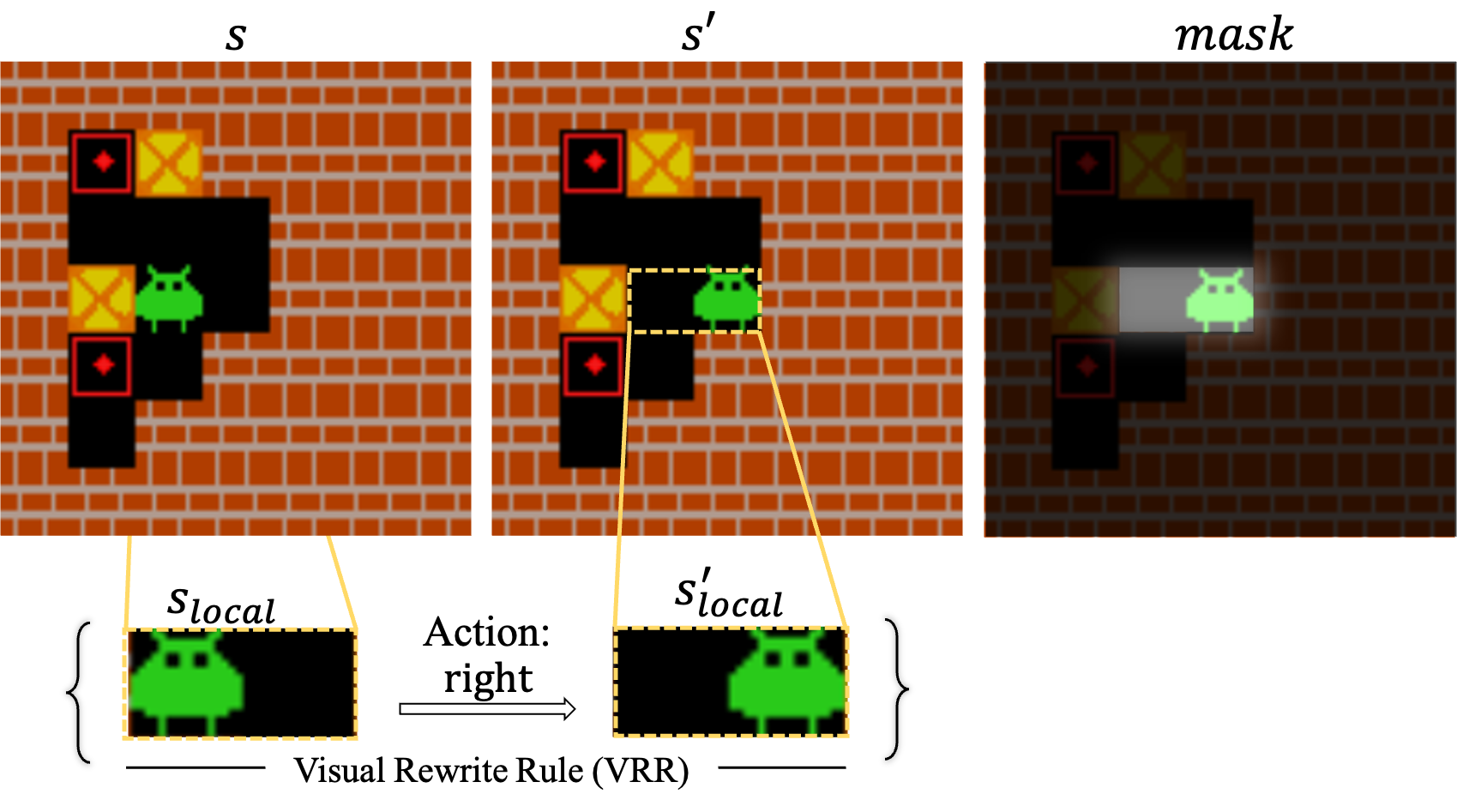

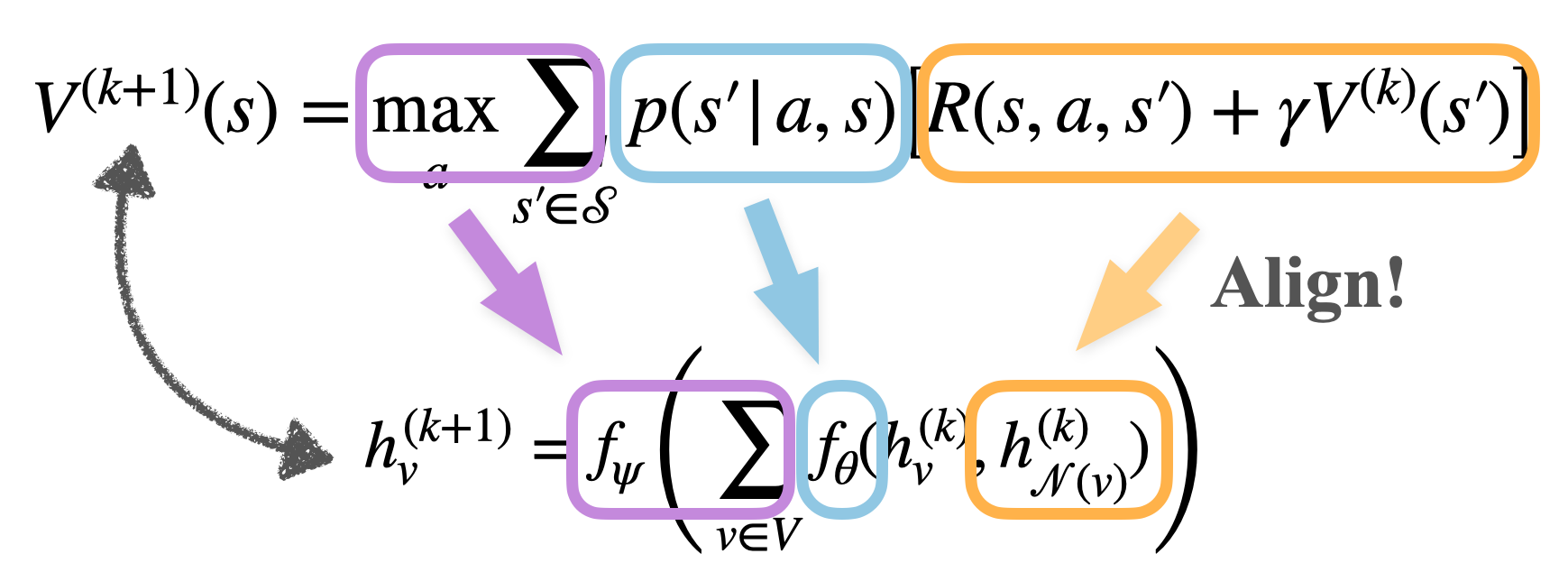

My research interests lie at the intersection of causal inference and reinforcement learning, especially how to build trustworthy, generalizable, and sample-efficient agents.

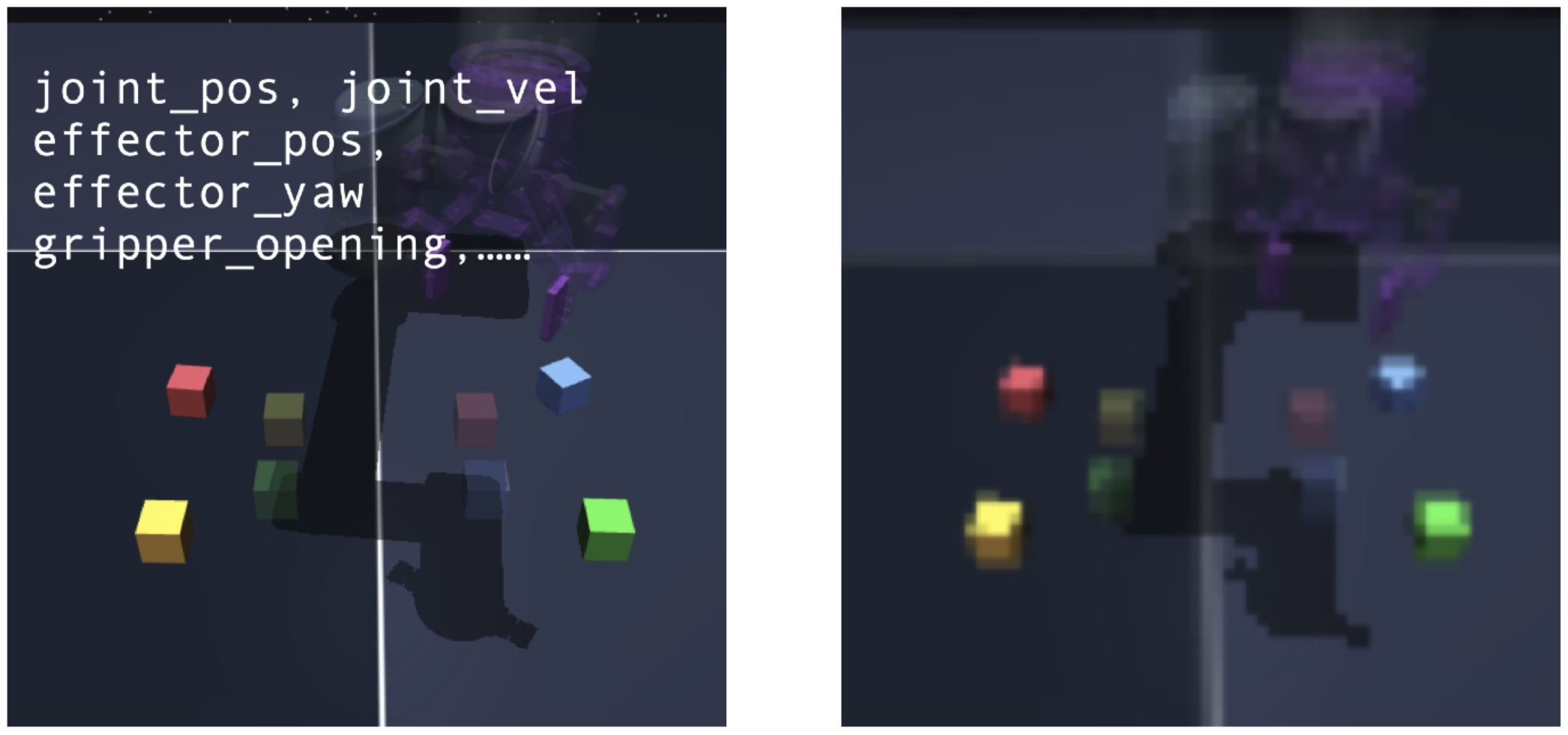

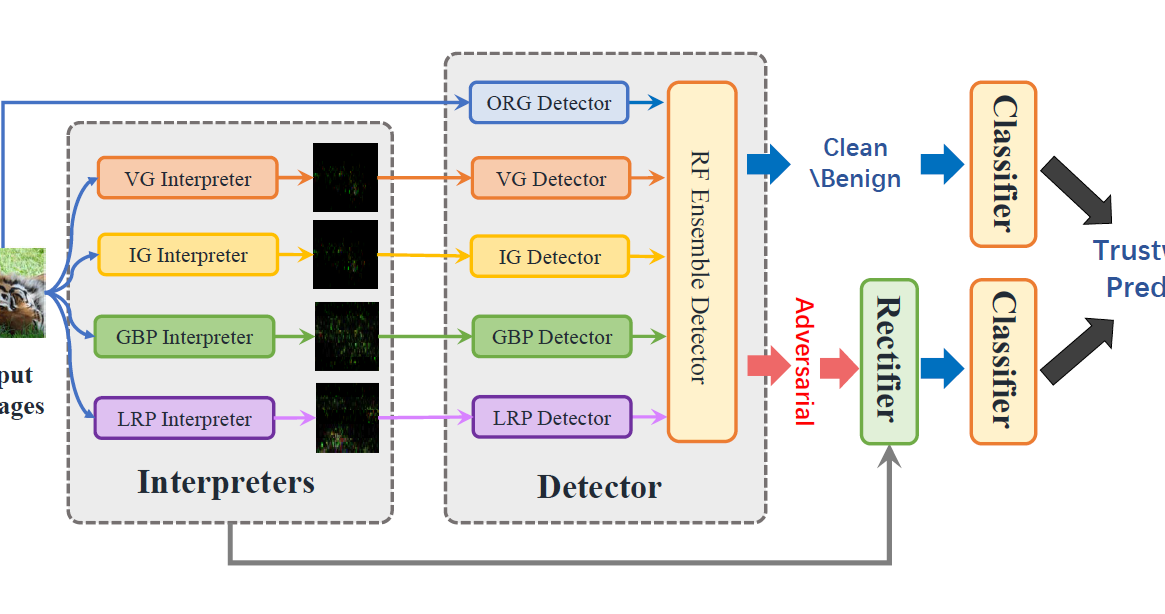

In the era of large foundation models, I believe a causally aligned agentic system is more important than ever. Beyond benchmark numbers, there are still many underexplored territories where classic problems like reward hacking, safety, alignment, and agent explainability/interpretability re-emerge. My recent interest is in designing practical and scalable causal tools to mitigate those problems in language models, robotics, and agentic systems.

Feel free to contact me if you are interested in working on causal reinforcement learning!

Jan. 2026: I am elected to be a
